The Intelligent Virtual Assistant (IVA)

The idea of IVAs is not new. IVA adoption has grown recently owing to:

- Tech Improvements: speech-to-text, text-to-speech, and natural language understanding (NLU) have improved to the point where conversational interactions with a “chatbot” are possible.

- Availability: Most OSs come with an embedded IVA, such as Siri, Google Assistant, Bixby, Alexa, or Cortana.

Apart from Google Assistant, though, the human-like names of these IVAs tempt users to anthropomorphize them and ascribe agency to them, which is clearly misguided.

Agency

Let me pause here to define agency as the ability to:

- Think (“Intelligence”)

- Act on that thinking (Free Will)

IVAs as they stand today simply do not have these capabilities.

Most importantly, neither does Generative AI.

One could argue that we, so-called sentient beings, are just wetware hosting Generative AIs and ergo lack agency ourselves, but that is a discussion for another day.

Look at what ChatGPT said when I asked…

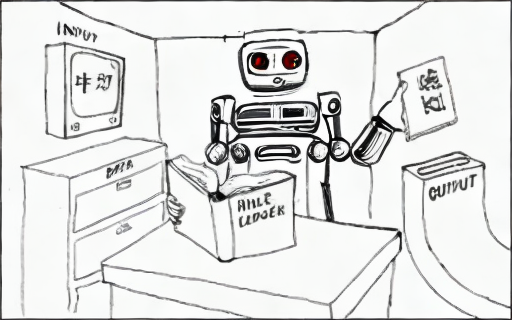

The Chinese Room argument, put forward by John Searle in 1980, best explains why Generative AI lacks true understanding and agency:

- You don’t know a word of Chinese.

- You’re locked in a room with a mail slot, through which you receive letters written exclusively in Chinese characters.

- Inside the room there is also a rule book you can consult, which specifies how to respond to each character or sequence of characters you see with another sequence of Chinese characters.

- You blindly follow these rules to produce a series of responses that are meaningful to those outside the room.

Does this mean that you understand Chinese? Clearly NOT.

Well, Generative AI does exactly this. Sure, its rule book “emerges” from the weights of a neural network rather than from explicit programming, but that does not weaken the argument in any way.
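To make the analogy concrete, here is a deliberately crude Python caricature of the rule book as a lookup table. The rules and phrases are made up for illustration, and a real language model has no such explicit table (its “rules” are implicit in learned weights), but the point carries over: the operator produces sensible replies purely by matching symbols, without knowing what any of them mean.

```python
# A toy "Chinese Room". RULE_BOOK and FALLBACK are hypothetical rules
# invented for this illustration, not taken from any real system.
RULE_BOOK = {
    "你好": "你好！",            # greeting ("hello") -> greeting
    "你好吗": "我很好，谢谢。",   # "how are you?" -> "I'm fine, thank you."
}

FALLBACK = "请再说一遍。"  # "please say that again"


def room_operator(letter: str) -> str:
    """Return the reply the rule book prescribes for an incoming letter.

    The lookup is purely syntactic: the operator compares character
    strings and never needs to understand them.
    """
    return RULE_BOOK.get(letter.strip(), FALLBACK)


print(room_operator("你好吗"))
```

Someone outside the room who sends “你好吗” gets back a perfectly polite answer, yet nothing in `room_operator` understands Chinese.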

Analyzing Samantha

Samantha, the AI operating system in Her (2013), displays a wide range of capabilities. Let’s break them down into categories.

Group 1: Objective in Nature & Achievable today

- Manage Contacts

- Personal Organizer

- Proofreading content for grammar and spelling

- Calendar reminders

- Make hotel reservations

- Perform background checks

Group 2: Subjective in Nature & Achievable today

- Email classification as important/unimportant

- Read and auto-reply to only some emails

- Edit / summarize content for style

- Cherry-pick the best piece of written content

- Pick a present for someone

Group 3: Achievable with Generative AI

- High-quality romantic conversations

- Companionship / Virtual psychotherapist

Group 4: Probably won’t happen

- Interact with external agents to get content published

- The OSes collectively “going away”, as they do at the end of the film

Reimagining Psychotherapy with Generative AI

With the stigma around seeking help for mental health issues fading fast, I would posit that Generative AI will help shape the next generation of psychotherapy. A big disclaimer here: I have no medical expertise in mental health.

Model 1: The Therapist’s Apprentice

The human user seeking a therapy session interacts with a Generative AI agent that is trained to be a patient listener and to ask probing questions.

Ideally, beyond text, the agent is trained to read the user’s body language, breathing patterns, heart rate, tonality, and other cues.

Throughout the session, a human therapist is available in the background for escalations and can seamlessly take over the interaction at any point.

At the end of each session, the agent summarizes the interaction and clips key excerpts of the conversation for the human therapist to review.

Model 2: Dear Diary

The human user has a personalized, offline virtual assistant trained in psychotherapy.

The human user interacts with the virtual assistant daily. This interaction is meant to be akin to the once-popular habit of writing a diary entry every day.

Conversations are private by default, but the virtual assistant is trained to:

- suggest booking a session with a human psychotherapist when certain distress thresholds are crossed

- escalate to law enforcement or other agencies when there are signs of self-harm or violence
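These two escalation rules amount to a simple triage policy. The Python sketch below assumes that some upstream model produces a distress score between 0 and 1 and a yes/no flag for signs of self-harm or violence; the score, the flag, the `triage` function, and the 0.7 threshold are all hypothetical placeholders, not clinical values.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue the diary conversation"
    SUGGEST_THERAPIST = "suggest a session with a human psychotherapist"
    ESCALATE = "escalate to law enforcement or other agencies"


# Hypothetical threshold on a hypothetical 0.0-1.0 distress score.
DISTRESS_THRESHOLD = 0.7


def triage(distress_score: float, harm_signal: bool) -> Action:
    """Map the assessment of one diary entry to an action.

    distress_score and harm_signal are assumed to come from an upstream
    classifier; how they are computed is out of scope for this sketch.
    """
    if not 0.0 <= distress_score <= 1.0:
        raise ValueError("distress_score must be in [0, 1]")
    # Signs of self-harm or violence take priority over everything else.
    if harm_signal:
        return Action.ESCALATE
    # Otherwise, crossing the distress threshold triggers a gentle nudge.
    if distress_score >= DISTRESS_THRESHOLD:
        return Action.SUGGEST_THERAPIST
    return Action.CONTINUE
```

Checking the harm flag first means a low overall distress score can never mask a safety signal.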

A fully trained psychotherapist AI could be made available offline and take up less than 1 GB of storage. Guaranteed privacy lets the user speak freely, without reservation, unlike with a human psychotherapist, who must first earn the user’s trust.
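Whether a model fits in under 1 GB comes down to simple arithmetic: parameter count times bytes per parameter. The sketch below ignores overheads such as tokenizer files and metadata, and the parameter counts and bit widths are illustrative assumptions, not the size of any particular therapy model.

```python
def model_size_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate size of model weights in gigabytes (10**9 bytes).

    Ignores overheads such as the tokenizer, metadata, and quantization
    scale factors, so real files are somewhat larger.
    """
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


# Illustrative (hypothetical) configurations: (parameter count, bits per weight).
for params, bits in [(1_000_000_000, 16), (1_000_000_000, 4), (500_000_000, 8)]:
    print(f"{params / 1e9:.1f}B params @ {bits}-bit: {model_size_gb(params, bits):.2f} GB")
```

By this estimate a one-billion-parameter model needs about 2 GB at 16-bit precision but roughly 0.5 GB when quantized to 4 bits, which is one way a sub-1 GB footprint could be reached.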

Only with the user’s permission, and when a therapy session is scheduled, will conversation logs be selectively shared with an appropriate third party.

Possible points of failure

In psychotherapy, the cost of failure in an edge case can be very high, especially when a user’s thoughts of self-harm or violence go undetected. Regulatory approval may also be difficult to obtain.

To date, the best example of AI-led psychotherapy has been Wysa. Despite these hurdles, I expect the technology to improve vastly and AI psychotherapists to become widely available and inexpensive.